OpenAI has released its next version of the AI model GPT-4. The newest update in the model makes a new leap forward in the OpenAI’s chatbot world. The latest version of the GPT comes with a powerful new image and text-understanding model. However, the updated version of the GPT is currently made available only for ChatGPT Plus users.

The older generation of GPT has swept the world away with its deep language learning and witty conversations. Although, the model didn’t have the ability to understand images and translate them into the text format. With the announcement of the 4th generation of an AI model, OpenAI has taken its artificial intelligence to the next stage.

GPT-4 from OpenAI New Advancements and Image Recognition

It produces text outputs from inputs that contain both interleaved text and graphics, according to a recent article by OpenAI. GPT-4

displays identical capabilities as it does on text-only inputs throughout a range of domains, including documents containing text and photos, diagrams, or screenshots.

Nevertheless, owing to misuse concerns, the business is postponing the deployment of its image-description capability, and the GPT-4 version that is available to subscribers of OpenAI’s ChatGPT Plus service only supports text.

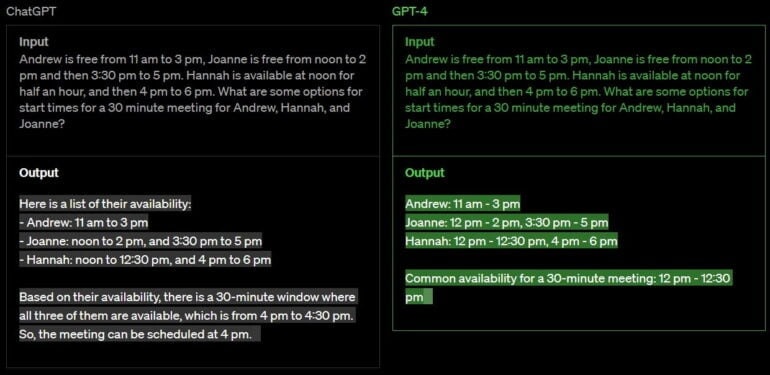

GPT-4 performs at the “human level” on a variety of professional and academic benchmarks, can create text, and can take both text and picture inputs. An upgrade above GPT-3.5, which only accepted text. For instance, GPT-4 successfully completes a mock bar exam with a score in the top 10% of test takers, but GPT-3.5 received a score in the bottom 10%.

In a blog article, OpenAI said that GPT-4 may still make the same mistakes as earlier iterations. The mistakes made were such as “hallucinating” gibberish, upholding societal prejudices, and dispensing poor advice.

It also “does not learn from its experience,” making it difficult for individuals to teach it new things, and it is unaware of events that occurred after around September 2021, when its training data was finished.

Microsoft’s Plans on GPT’s Video AI Model Kosmos-1

Despite of the drawbacks, it is the anticipation that its technology will become a secret weapon for its office software, search engine, and other internet goals. The tech giant Microsoft has spent billions of dollars on OpenAI.

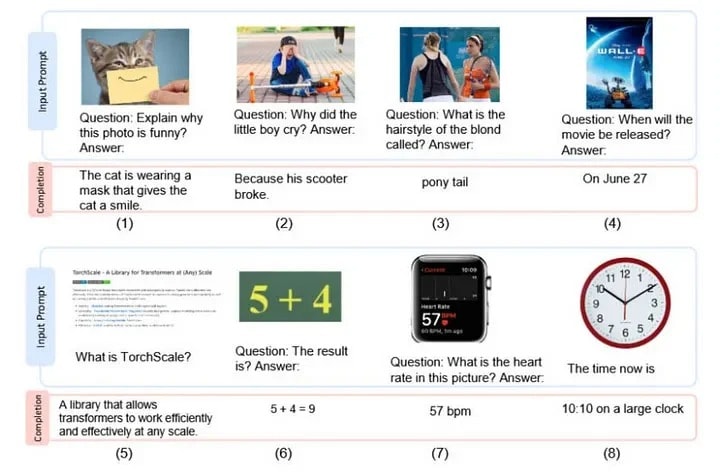

Kosmos-1, a multi-modal language model from Microsoft that works with several formats, has previously been introduced. The AI in the Kosmos-1 presentation can interpret pictures in addition to text. For instance, the AI is asked, “What time is it now?.” After receiving an image of a clock displaying the time as 10:10. The AI responds, “10:10 on a large clock,” to that.

Moreover, the model has the ability to identify a specific hairdo that a lady is sporting. Identifies a movie poster and notifies the user when the movie will be released.

Andreas Braun, chief technical officer for Microsoft Germany, stated last week that GPT-4 will “provide entirely other possibilities, including films.” Nevertheless, according to today’s release, GPT-4 does not mention video. The sole multimodal component is the input of photos, which is far less than anticipated.