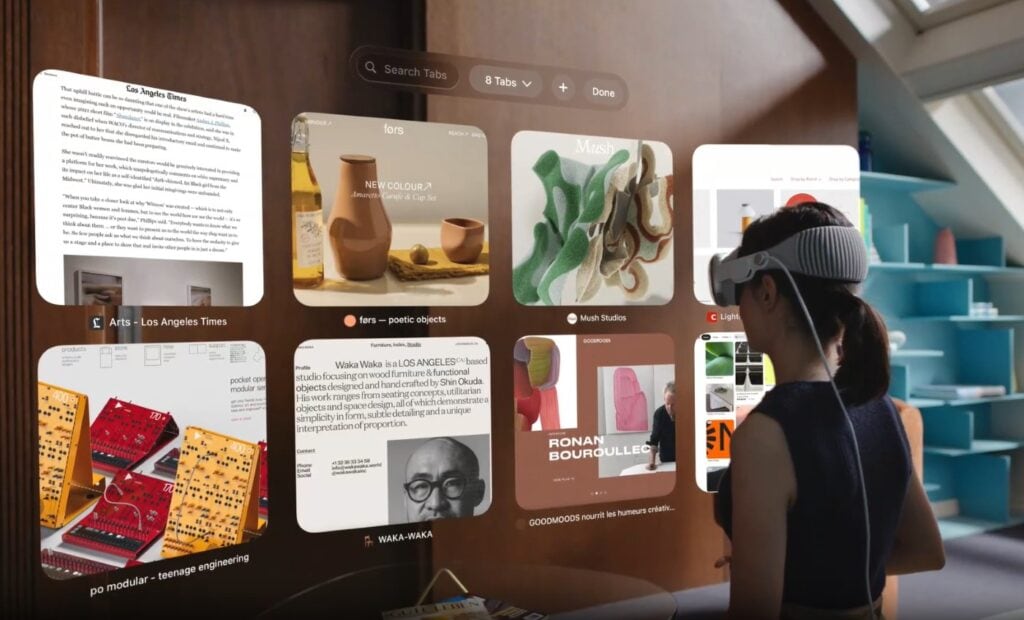

Apple has fully entered the augmented and virtual reality segment with its Vision Pro headset. At WWDC23, Apple not only showcased new software advancements but also announced this AR/VR headset. The event also introduced the new 15-inch MacBook Air, powered by Apple's M2 chip.

Apple has now released developer tools for the Vision Pro to help developers build applications for the device. The headset runs visionOS and currently ships with only Apple's core applications. That is set to change, as Apple has opened the platform to third-party developers.

In its post announcing the release of the visionOS SDK, Apple said:

> Starting today, Apple’s global community of developers will be able to create an entirely new class of spatial computing apps that take full advantage of the infinite canvas in Vision Pro and seamlessly blend digital content with the physical world to enable extraordinary new experiences.
>
> With the visionOS SDK, developers can utilize the powerful and unique capabilities of Vision Pro and visionOS to design brand-new app experiences across a variety of categories including productivity, design, gaming, and more.

visionOS developer tools for Apple Vision Pro now available

When it announced Vision Pro at WWDC, Apple promised a set of powerful tools for visionOS developers. That promise has now been delivered: the company has published a press release announcing that the visionOS SDK is available to developers. The SDK arrives at least six months before the Vision Pro headset officially goes on sale.

The visionOS SDK is built on the same foundation as the SDKs for Apple's other operating systems, using familiar tools and frameworks such as Xcode, SwiftUI, RealityKit, ARKit, and TestFlight. This makes it easier for existing Apple developers to move over and build apps for the Vision Pro headset.

Alongside these existing tools, Apple has added Reality Composer Pro, a new Xcode feature that lets developers easily preview 3D models, images, sounds, and animations. Apple also provides a visionOS simulator, which gives developers a virtual approximation of how their apps will look and behave without needing the hardware.

Apple will also soon add support for Unity's development tools, which should particularly benefit developers who want to create games for the headset. The WWDC23 presentation showed little of the gaming experience on Vision Pro, so Unity support gives game developers a clear path to start building titles for the device.

Apple has also announced that it will open developer labs next month in Cupertino, London, Munich, Shanghai, Singapore, and Tokyo. These labs will give developers hands-on experience with the Vision Pro hardware.

This should give developers a clearer idea of how their apps will run on the device and what experiences they can offer Vision Pro users.