Apple has previewed the iOS 17 accessibility features ahead of this year's WWDC event. The new software features for cognitive, speech, and vision accessibility will be available on iOS 17, iPadOS 17, and macOS 14. They will arrive on Apple devices later this year, following WWDC in early June.

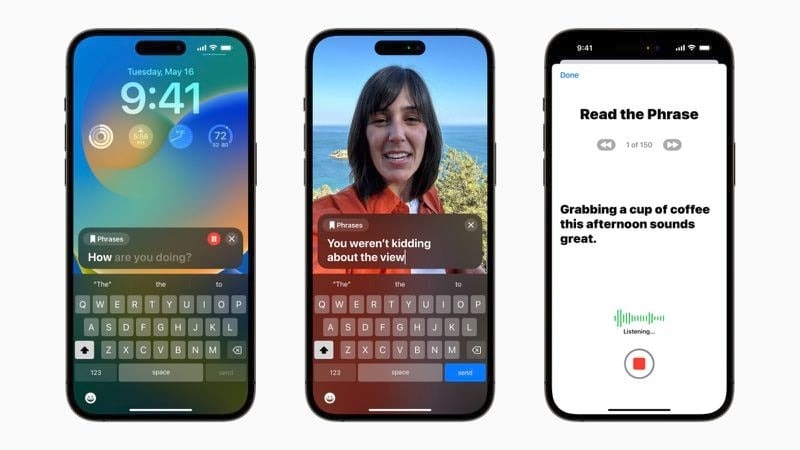

One of the new features is aimed at people who are at risk of losing their ability to speak, and lets them create a voice of their own. The “Personal Voice” feature is part of the accessibility options and generates a personalized voice that sounds natural. The tech giant has also announced another feature that lets you type a message and have it read out loud.

Apple Previews iOS 17 Accessibility Features Ahead of WWDC

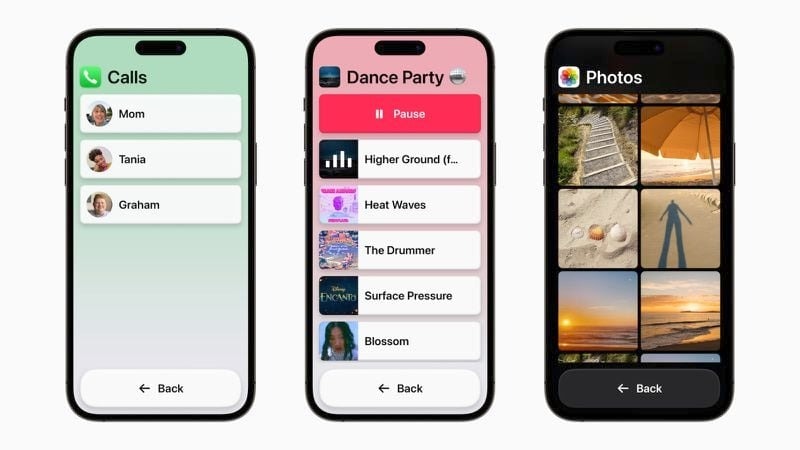

The new accessibility features in iOS 17 include Assistive Access, which pares down the full iOS experience to a simpler UI. Assistive Access offers a customized experience that combines Phone and FaceTime for making calls. With the feature enabled, the UI shows high-contrast buttons and large text labels.

The accessibility features in iOS 17 also include Live Speech on iPhone, iPad, and Mac. Live Speech lets users type what they want to say and have it spoken aloud during phone and FaceTime calls, as well as in in-person conversations. The feature is helpful for people with conditions such as ALS who are at risk of losing their ability to speak.

To create a Personal Voice, users read along with a randomized set of text prompts to record 15 minutes of audio on iPhone or iPad. The device uses machine learning while keeping users' information secure and private. Personal Voice also integrates with Live Speech, so users can speak with their Personal Voice.

The last accessibility feature is Point and Speak, part of Detection Mode in Magnifier. With Point and Speak, the Magnifier app on iOS helps users interact with physical objects. If an object has text labels, the user can point the camera directly at it. The feature then announces the text on each label as the user points a finger at it.

Point and Speak takes advantage of the LiDAR scanner on the iPhone to read text aloud. The feature works with VoiceOver and with other options available in the Magnifier app. Users with disabilities can use it alongside People Detection, Door Detection, and Image Descriptions to navigate their surroundings.